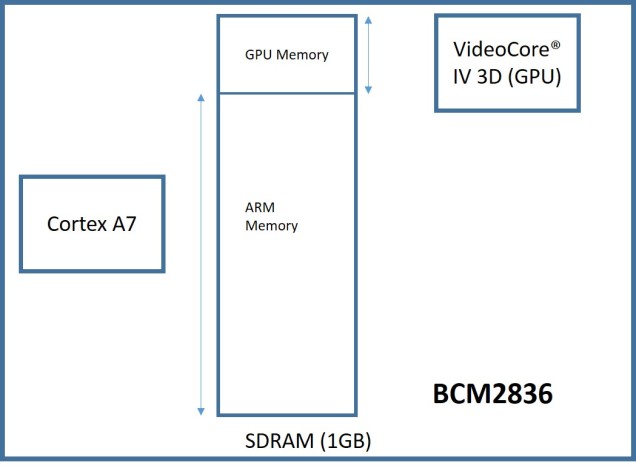

Raspberry Pi 2 is powered by a Broadcom BCM2836 chipset. Along with typical peripheral IP blocks such as UART and SD, the BCM2836 ASIC integrates four ARM Cortex-A7 cores and a graphics processing engine. The primary intent of this post is to elaborate on the ARM-GPU inter-processor communication (IPC) mechanism, that is, to explain the hardware and software blocks involved in establishing a messaging channel between these two processors.

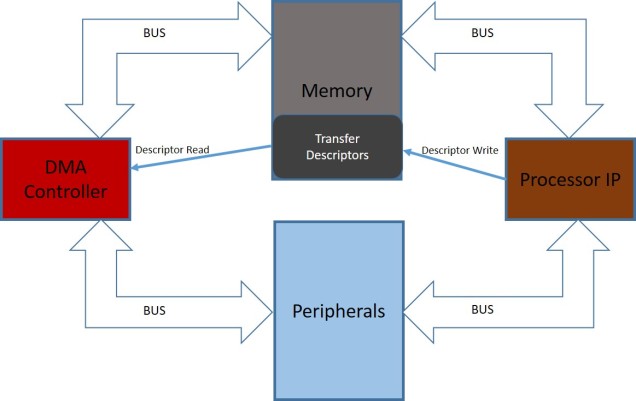

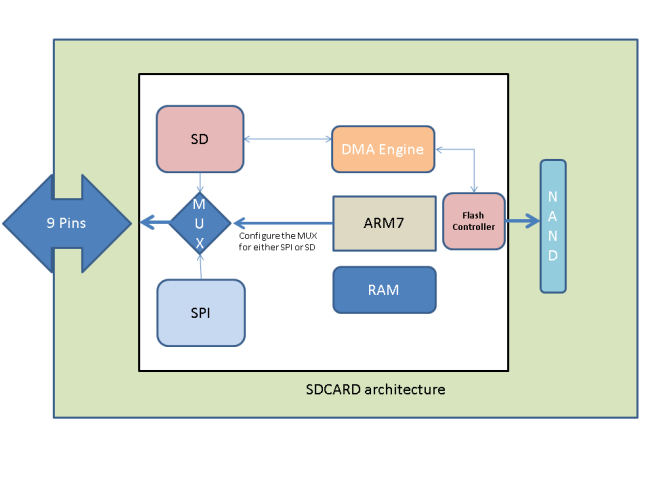

The basic infrastructure is shown in the accompanying diagram: we have the ARM processor and the VideoCore engine sharing an SDRAM. Note that the SDRAM is an off-chip module, so an external bus interface is necessarily involved here. With multiple processors and a common SDRAM, the obvious IPC mechanism is shared memory. But before getting into more details, let's have a brief overview of the RPi 2 boot.

Boot Process

BCM2836 boot binaries are located on the FAT-formatted micro SD card. Binaries for both GPU and ARM are in the FAT root directory. Once powered up, the very first boot step is the execution of the BCM2836 on-chip boot ROM, which fetches the second level bootloader, which in turn boots the GPU binary from SD. It is the GPU binary that is responsible for loading the ARM executable. More details about the boot can be read here, but for now we can crudely summarize the steps in the following manner.

- Power ON.

- BCM2836 BOOT ROM fetches second level bootloader.

- Second level boot loader fetches GPU binary.

- GPU binary loads the ARM image and transfers control.

So in this post we are looking to explain certain aspects of the ARM IPC code which are essential for communicating with the GPU. Please note that the second level bootloader and GPU binary images can be fetched from the GitHub repository. Now, let's attempt to establish the fundamentals of this ARM-GPU IPC.

Shared Memory

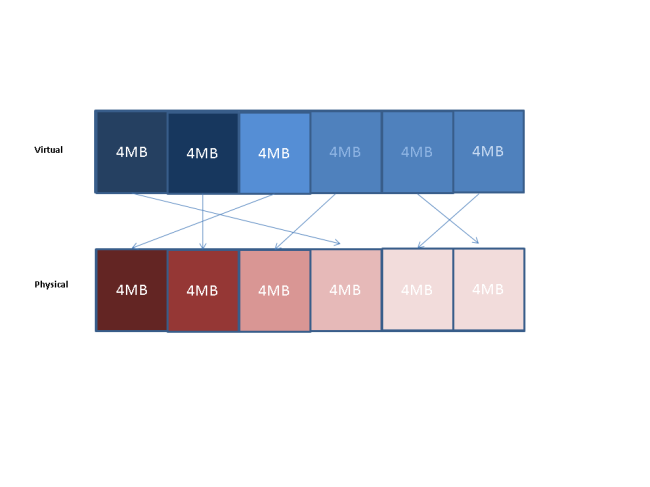

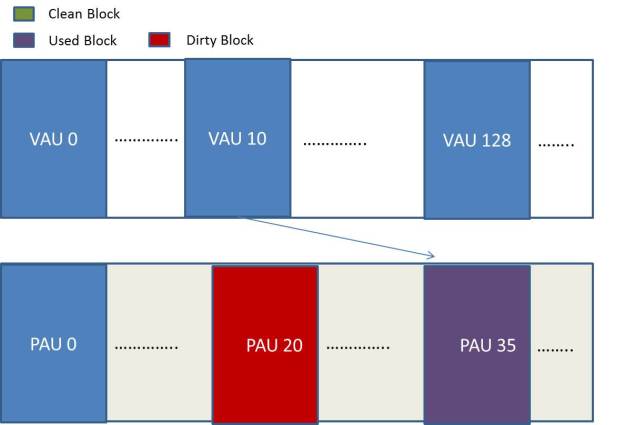

To drive the graphics framebuffer, or to accomplish any such task involving both the ARM and GPU processing units, we require some meaningful message exchanges between these cores. For that we use the shared SDRAM. But note that the ARM-GPU combination is an asymmetric multiprocessing environment, so first we need to establish the processor-level split of this 1 GB SDRAM. Basically, this defines how much RAM is dedicated to each of these cores. The division is defined in the "config.txt" configuration file located on the SD card; the relevant configuration parameter is shown below.

    # GPU – CPU Split
    gpu_mem=64

Note that “config.txt” is read by GPU during the boot process.

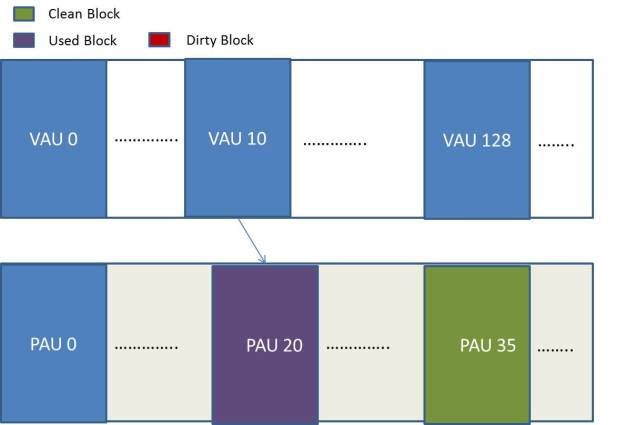

According to the documentation, the above "gpu_mem=64" setting should allocate the top 64 MB (addresses 0x3C000000 to 0x3FFFFFFF) of the SDRAM to the GPU and the rest to ARM. In reality this does not seem to be the case: the top 80 MB is actually taken by the GPU and the bottom 944 MB goes to ARM. I had to figure this out the hard way by messaging the GPU for its memory configuration, more on this later. The final high-level split is shown in the accompanying illustration.
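As a quick check of the address arithmetic behind these figures, here is a minimal sketch in C. The helper name `gpu_region_base` and the constants `SDRAM_SIZE` and `MB` are made up for this illustration, and the sketch assumes that the GPU region always sits at the very top of a 1 GB SDRAM mapped from address 0.

```c
#include <stdint.h>

/* Assumption: 1 GB of SDRAM, mapped from physical address 0. */
#define SDRAM_SIZE 0x40000000u
#define MB         0x00100000u

/* Hypothetical helper: first address of a GPU region of gpu_mb megabytes
   carved from the top of the SDRAM. Everything below it belongs to ARM. */
uint32_t gpu_region_base(uint32_t gpu_mb)
{
    return SDRAM_SIZE - gpu_mb * MB;
}
```

With these assumptions, `gpu_region_base(64)` gives 0x3C000000 (the documented split), while `gpu_region_base(80)` gives 0x3B000000, which leaves 944 MB for ARM and matches the observed behaviour.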

So now the binaries running on both ARM and GPU know their own boundaries. That is great, because we cannot afford any form of cannibalism. Now we can introduce the next level of IPC synchronization: the Mailbox mechanism. This peripheral helps ARM and GPU communicate the exact shared memory address where the larger shared message structures can be read or written.

The basic idea is simple: one Mailbox is for reading and the other is for writing. Mailbox-0 is written by the GPU and read by the Cortex-A7; similarly, Mailbox-1 is written by the A7 and read by the GPU. These 32-bit Mailbox registers are ideal for communicating the shared SDRAM address used for ARM-GPU message exchanges.

Mailboxes

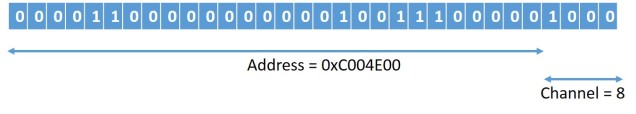

The four least significant bits (a nibble) of the 32-bit address communicated through the mailbox are reserved for identifying the Mailbox channel. The channel indicates how to interpret the data structure at the transmitted address. Each channel tends to have its own message structure, so this implements a form of multiplexing over the same mailbox interface.

More details regarding these channels can be read here. Note that reserving the last 4 bits for the channel number also means that the transmitted address always has to be aligned on a 16-byte boundary. But this is more or less insignificant, because if the ARM Cortex-A7 L1 cache is enabled, then to manage coherency we would anyway want buffers to be aligned on 64-byte cache line boundaries. Also, shared buffers that get cached in L1 should be flushed before transmission and invalidated after the GPU has written to them. Of course, all the shared memory addresses exchanged have to be physical bus addresses, not virtual ones, otherwise they would make no sense to the GPU.

An example of a 32-bit address transmitted over Mailbox channel 8 is illustrated above. Note that this encoding of the channel within the least significant nibble is a software mechanism; to the hardware these are mere 32-bit values. Channel 8, which communicates property tags, is the one we use here for illustrating the higher level data structures of the IPC. Now for some grisly register map details.
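The nibble encoding described above can be sketched as a pair of pack and unpack helpers. The function and macro names here (`mbox_pack`, `mbox_word_channel`, `mbox_word_address`, `MBOX_CHANNEL_MASK`, `MBOX_CHANNEL_PROP`) are invented for this illustration and are not part of any official API.

```c
#include <stdint.h>

#define MBOX_CHANNEL_MASK 0xFu   /* low nibble carries the channel number */
#define MBOX_CHANNEL_PROP 8u     /* property tags channel used in this post */

/* Pack a 16-byte-aligned address and a channel into one mailbox word.
   Returns 0 on success, -1 if the address is misaligned or the channel
   does not fit into four bits. */
int mbox_pack(uint32_t address, uint32_t channel, uint32_t *word)
{
    if ((address & MBOX_CHANNEL_MASK) != 0 || channel > MBOX_CHANNEL_MASK)
        return -1;
    *word = address | channel;
    return 0;
}

/* Extract the channel number from a mailbox word. */
uint32_t mbox_word_channel(uint32_t word)
{
    return word & MBOX_CHANNEL_MASK;
}

/* Extract the 16-byte-aligned address from a mailbox word. */
uint32_t mbox_word_address(uint32_t word)
{
    return word & ~MBOX_CHANNEL_MASK;
}
```

For example, packing the address 0x00100000 with channel 8 produces the word 0x00100008. Rejecting misaligned addresses up front is a design choice of this sketch, since a stray low bit would otherwise silently change the channel.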

    /* Register access helper */
    #define REG(x) (*(volatile unsigned int *)(x))

    /* Mailbox 0 base address (Read by ARM) */
    #define MBOX0_BASE 0x3F00B880

    /* Mailbox 1 base address (Read by GPU) */
    #define MBOX1_BASE 0x3F00B8A0

    /*
    ** Mailbox Controller Registers
    */

    /* I/O register is at the base address */
    #define MBOX_RDWR(x) REG((x))

    /* Status register at the offset 0x18 from base */
    #define MBOX_STATUS(x) REG((x) + 0x18)

    /* Status register value when mailbox is full/empty */
    #define MBOX_FULL 0x80000000
    #define MBOX_EMPTY 0x40000000

    /* Interrupt configuration register */
    #define MBOX_CONFIG(x) REG((x) + 0x1C)

    /* Configuration register mask to enable mailbox data IRQ */
    #define MBOX_DATAIRQEN 0x00000001

For a successful mailbox transaction we only need the above three hardware registers and the associated bit masks.

- MBOX_RDWR : I/O register to transfer the 32-bit value

- MBOX_STATUS : Status register to synchronize the communication

- MBOX_CONFIG: Configuration register to enable interrupt generation
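As an alternative to offset macros, the same three registers can be described with a struct overlay. This is only a sketch: the type name `mbox_regs`, the `MBOX0`/`MBOX1` pointer macros and the `mbox_layout_ok` helper are assumptions made for this example, with offsets taken from the macros above.

```c
#include <stddef.h>
#include <stdint.h>

/* One mailbox as seen from the ARM side; offsets follow the macros above. */
struct mbox_regs {
    volatile uint32_t rdwr;       /* 0x00: I/O register */
    volatile uint32_t unused[5];  /* 0x04 to 0x14: not needed for this post */
    volatile uint32_t status;     /* 0x18: full/empty flags */
    volatile uint32_t config;     /* 0x1C: interrupt configuration */
};

/* On the target, the overlays would be placed at the base addresses. */
#define MBOX0 ((struct mbox_regs *)0x3F00B880u)
#define MBOX1 ((struct mbox_regs *)0x3F00B8A0u)

/* Returns 1 when the compiler laid the struct out at the expected offsets. */
int mbox_layout_ok(void)
{
    return offsetof(struct mbox_regs, rdwr)   == 0x00 &&
           offsetof(struct mbox_regs, status) == 0x18 &&
           offsetof(struct mbox_regs, config) == 0x1C;
}
```

A benefit of this form is that the register access code takes a pointer, so it can be exercised on a host machine against an ordinary struct instance before it ever touches the hardware.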

Below we have a write sequence transmitting a 32-bit value from ARM to GPU:

    /* 1. Enable Mailbox0 interrupt to catch the GPU response,
          this is optional if the read is going to happen in
          polled mode. */
    MBOX_CONFIG(MBOX0_BASE) |= MBOX_DATAIRQEN;

    /* 2. Before writing, loop until there is space available
          in Mailbox1. This step may be optional if the code
          always waits for a full transaction to complete. */
    while (MBOX_STATUS(MBOX1_BASE) & MBOX_FULL);

    /* 3. Write the 32 bit address into the Mailbox1 I/O register */
    MBOX_RDWR(MBOX1_BASE) = ui32_address | channel;

Once ARM has transmitted the 32-bit value over Mailbox1, the next step is to wait for the response written by the GPU to Mailbox0. To read this 32-bit GPU response we first wait for the interrupt and then read the MBOX_RDWR register.

    /* 1. Now that the interrupt has fired, disable it. */
    MBOX_CONFIG(MBOX0_BASE) &= ~MBOX_DATAIRQEN;

    /* 2. Read the Mailbox 0 I/O register */
    ui32_value = MBOX_RDWR(MBOX0_BASE);

Instead of using the interrupt, it is also possible to poll the Mailbox status register: simply loop until the MBOX_EMPTY bit is cleared.

    /* 1. Poll the status */
    while (MBOX_STATUS(MBOX0_BASE) & MBOX_EMPTY);

    /* 2. Read the Mailbox 0 I/O register */
    ui32_value = MBOX_RDWR(MBOX0_BASE);

Additionally we should also add the check to validate that the response is indeed for the expected channel.

    do {
        /* 1. Poll the status */
        while (MBOX_STATUS(MBOX0_BASE) & MBOX_EMPTY);

        /* 2. Read the Mailbox 0 I/O register */
        ui32_value = MBOX_RDWR(MBOX0_BASE);

        /* 3. Repeat until the response belongs to our channel */
    } while ((ui32_value & 0xF) != channel);
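The polling loop above spins forever if the GPU never answers. A possible variation, sketched below, bounds the number of polls and reports a timeout instead. The struct `mbox_regs`, the function `mbox_read_bounded` and its `max_spins` parameter are assumptions for this example. Passing the mailbox as a pointer is also an illustrative choice, so that the logic can be tried on a host with a fake register block.

```c
#include <stdint.h>

#define MBOX_EMPTY 0x40000000u

/* Register overlay (hypothetical type); offsets match the macros above. */
struct mbox_regs {
    volatile uint32_t rdwr;       /* 0x00: I/O register */
    volatile uint32_t unused[5];  /* 0x04 to 0x14 */
    volatile uint32_t status;     /* 0x18: full/empty flags */
    volatile uint32_t config;     /* 0x1C: interrupt configuration */
};

/* Poll mailbox mb for a word on the given channel, giving up after
   max_spins loop iterations. On success, stores the word with the channel
   nibble masked off in *value and returns 0; returns -1 on timeout. */
int mbox_read_bounded(struct mbox_regs *mb, uint32_t channel,
                      uint32_t max_spins, uint32_t *value)
{
    while (max_spins-- > 0) {
        if (mb->status & MBOX_EMPTY)
            continue;                  /* nothing to read yet */

        uint32_t word = mb->rdwr;      /* fetch the pending word */
        if ((word & 0xFu) == channel) {
            *value = word & ~0xFu;
            return 0;
        }
        /* The word was meant for another channel: keep waiting. */
    }
    return -1;
}
```

On the target the first argument would be the Mailbox 0 base address cast to `struct mbox_regs *`. A suitable value for `max_spins` depends on the core clock and on how long the GPU takes to respond, so it has to be tuned per system.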

Now we have established a Mailbox based synchronization mechanism for both the cores to communicate shared memory addresses.

Data Structure

The Mailbox merely enables the processors to exchange buffer pointers. The structure of the buffer contents depends on the channel. A detailed specification of the shared memory data structures used for Mailbox channel 8 is linked here. Below we have a top-level overview of the channel 8 packet:

    /* Packet HEADER */
    struct header
    {
        ui32 packet_length; // size in bytes (including header and values)

        /*
        ** Request codes:
        **     0x00000000: process request
        **     All other values reserved
        ** Response codes:
        **     0x80000000: request successful
        **     0x80000001: error parsing request buffer
        **                 (partial response)
        */
        ui32 req_resp_code;
    };

    /* Packet CONTENTS */
    struct tags
    {
        ui32 id;             // id
        ui32 sz;             // value size in bytes
        ui32 req_resp;       // request/response code
        ui32 value[BUF_LEN]; // value length varies for each tag
    };

    /* Channel 8 Packet */
    struct packet
    {
        struct header pheader;
        struct tags ptags[NUMS];
    };

Please note that the above illustration is merely meant to bring clarity to the high level structure of this packet. Because the value length varies from tag to tag, in reality it is best to implement the packet generation using a ui32 array instead of the above structures.

The "struct packet" is essentially a header followed by a sequence of tags. Each tag is associated with a particular request, such as "Get VC memory" or "Get ARM memory". In fact, these two tags were used to figure out the previously mentioned discrepancy of 64 MB versus 80 MB in the ARM-GPU SDRAM split.
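To make the array-based approach concrete, here is a minimal sketch that builds a request carrying the "Get ARM memory" and "Get VC memory" tags. The helper names `put_tag` and `build_memory_query` are invented for this example. The tag identifiers and the 8-byte value size are quoted from the Mailbox property interface documentation as I understand it, so please verify them against that document before relying on them.

```c
#include <stdint.h>

/* Tag identifiers as listed in the Mailbox property interface document. */
#define TAG_GET_ARM_MEMORY 0x00010005u
#define TAG_GET_VC_MEMORY  0x00010006u
#define TAG_END            0x00000000u
#define CODE_REQUEST       0x00000000u

/* Append one request tag with a zero-filled value buffer of value_bytes
   (a multiple of 4) at index i. Returns the next free index. */
uint32_t put_tag(uint32_t *buf, uint32_t i, uint32_t id, uint32_t value_bytes)
{
    buf[i++] = id;            /* tag id */
    buf[i++] = value_bytes;   /* value buffer size in bytes */
    buf[i++] = CODE_REQUEST;  /* request code */
    for (uint32_t n = 0; n < value_bytes / 4; n++)
        buf[i++] = 0;         /* space for the GPU response */
    return i;
}

/* Fill buf (at least 13 words; 16-byte aligned on the target) with a
   memory query request. Returns the packet length in bytes. */
uint32_t build_memory_query(uint32_t *buf)
{
    uint32_t i = 2;                              /* skip the header */
    i = put_tag(buf, i, TAG_GET_ARM_MEMORY, 8);  /* base + size */
    i = put_tag(buf, i, TAG_GET_VC_MEMORY, 8);   /* base + size */
    buf[i++] = TAG_END;
    buf[0] = i * 4;                              /* packet length */
    buf[1] = CODE_REQUEST;                       /* process request */
    return buf[0];
}
```

The resulting packet is 13 words (52 bytes) long: a two-word header, two five-word tags and the end tag. Its address, combined with channel 8, is what would be written to Mailbox 1.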

As mentioned in the link, these tag structures are populated with requests by ARM, and the GPU overwrites them with responses. For example, an "Allocate buffer" tag request makes the GPU return a frame buffer address. After that, a valid frame written to this location will be displayed on an attached HDMI monitor. We can even have multiple frame buffers and switch between them using the "Set virtual offset" tag. The response time for this operation seems to be deterministic at around 300 µs, which is good, because otherwise we would end up with frame lag.
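Since the GPU overwrites the request in place, reading a response amounts to walking the same array again. The sketch below shows one way to do that. The function name `find_tag_response` is hypothetical, and the convention that bit 31 of the per-tag request/response word marks a response is taken from the Mailbox property interface document.

```c
#include <stddef.h>
#include <stdint.h>

#define CODE_RESPONSE_OK 0x80000000u  /* header: request successful */
#define TAG_RESPONSE_BIT 0x80000000u  /* per tag: bit 31 set on response */

/* Walk a response buffer and return a pointer to the value words of the
   tag with the given id, or NULL if the packet failed, the tag is absent
   or the GPU did not answer it. */
const uint32_t *find_tag_response(const uint32_t *buf, uint32_t id)
{
    if (buf[1] != CODE_RESPONSE_OK)
        return NULL;

    uint32_t words = buf[0] / 4;              /* packet length in words */
    uint32_t i = 2;                           /* first tag after header */

    while (i + 3 <= words && buf[i] != 0) {   /* tag id 0 ends the list */
        uint32_t value_words = (buf[i + 1] + 3) / 4;
        if (i + 3 + value_words > words)
            return NULL;                      /* malformed packet */
        if (buf[i] == id)
            return (buf[i + 2] & TAG_RESPONSE_BIT) ? &buf[i + 3] : NULL;
        i += 3 + value_words;                 /* skip to the next tag */
    }
    return NULL;
}
```

For the "Get ARM memory" tag the two returned words are the base address and the size in bytes. A response consistent with the split reported earlier would carry base 0 and size 0x3B000000 (944 MB) there. Those are illustrative values, not captured output.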

References:

- Linux Mailbox Driver

- Valvers : Graphics Basic

- GitHub GPU/Bootloader Repository

- Valvers : Bare Metal Programming in C Pt1

- RPi 2 config.txt documentation

- Broadcom BCM2835 Peripheral Reference Manual

- Mailbox Property Interface

- VideoCore® IV 3D Architecture Reference Guide

- BCM2835 Errata