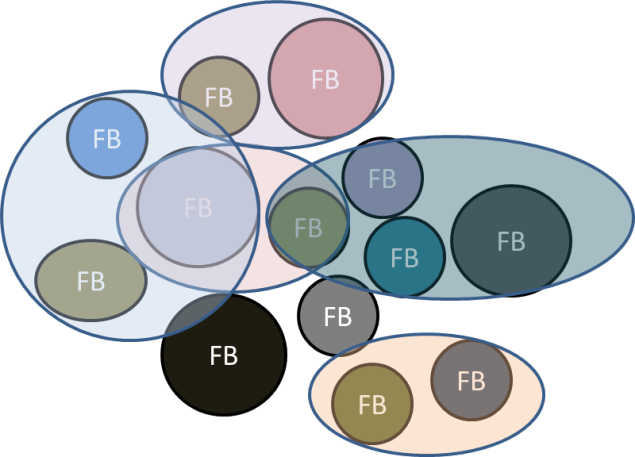

A little perspective on the whole process at work: computer science engineering can be seen as a process of discovering productive arrangements of functional modules (or functional blocks). Such an abstract module is a self-contained construction with two primary attributes: its functionality, and the interface rules that govern how inputs are supplied and how its processed output is channeled out. These two attributes span both the software and the hardware paradigms, as the diagram below illustrates.

The distinction between software and hardware lies merely in the implementation methodology; conceptually, both are integrations of abstract modules that communicate via shared rules to achieve a larger purpose.

Software comprises a network of functional modules interfaced via recognizable data structures. Such functional blocks are usually stacked, with the processed output of one channeled as input to another. The high-level module interfaces may be standardized: for an operating system or a file system, for example, the APIs tend to be POSIX compliant. That is seldom the case for the internal functions of a larger, complex module. Even at the assembly language level, we can view the instruction encoding as an interface to the processor, where the structure of the object code conveys the intended operation and the corresponding operands. Once the instruction decoder has decoded the object code, the requisite hardware blocks, such as the ALU or the load/store unit, are signaled to complete the instruction. Ultimately, the behavior of a generic processor depends on both the sequence and the content of the application's object code.
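The idea of object code acting as an interface can be sketched with a toy decoder. Everything here is an assumption made for illustration: the 16-bit instruction format, the opcode values, and the names `Machine`, `decode`, `alu`, `load_store`, and `run` are hypothetical and do not correspond to any real processor.

```python
from dataclasses import dataclass, field

# Hypothetical 16-bit encoding, invented for this sketch:
#   [15:12] opcode   [11:8] rd   [7:4] rs1   [3:0] rs2 or memory address
OP_ADD, OP_SUB, OP_LOAD, OP_STORE = 0x1, 0x2, 0x3, 0x4


@dataclass
class Machine:
    regs: list = field(default_factory=lambda: [0] * 16)
    mem: list = field(default_factory=lambda: [0] * 16)


def encode(op, rd, rs1, rs2):
    """Pack four 4-bit fields into one instruction word."""
    return (op << 12) | (rd << 8) | (rs1 << 4) | rs2


def decode(word):
    """Split an instruction word into (opcode, rd, rs1, rs2)."""
    return (word >> 12) & 0xF, (word >> 8) & 0xF, (word >> 4) & 0xF, word & 0xF


def alu(m, op, rd, rs1, rs2):
    """Arithmetic block: operates on registers only."""
    a, b = m.regs[rs1], m.regs[rs2]
    m.regs[rd] = (a + b if op == OP_ADD else a - b) & 0xFFFF


def load_store(m, op, rd, addr):
    """Memory block: moves data between a register and memory."""
    if op == OP_LOAD:
        m.regs[rd] = m.mem[addr]
    else:
        m.mem[addr] = m.regs[rd]


def run(m, program):
    """Decode each word and signal the block that handles it."""
    for word in program:
        op, rd, rs1, rs2 = decode(word)
        if op in (OP_ADD, OP_SUB):
            alu(m, op, rd, rs1, rs2)
        elif op in (OP_LOAD, OP_STORE):
            load_store(m, op, rd, rs2)
        else:
            raise ValueError(f"unknown opcode {op:#x}")


machine = Machine()
machine.mem[0], machine.mem[1] = 7, 5
program = [
    encode(OP_LOAD, 1, 0, 0),   # r1 = mem[0]
    encode(OP_LOAD, 2, 0, 1),   # r2 = mem[1]
    encode(OP_ADD, 3, 1, 2),    # r3 = r1 + r2
    encode(OP_STORE, 3, 0, 2),  # mem[2] = r3
]
run(machine, program)
print(machine.mem[2])  # prints 12
```

The same `run` loop produces entirely different behavior for a different `program` list, which is the point: the generic machine is steered only by the sequence and content of the words it is fed.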

Hardware design is a similar arrangement of functional modules. The high-level IP blocks are usually interlinked by generic bus protocols (such as AMBA, SD, PCI, or USB). Analogous to the software case, each hardware functional block samples a recognizable input signal pattern that complies with a mutually understood bus protocol, then channels the processed output to the same or a different kind of interface bus, depending on the functionality of that hardware logic. For example, a DMA controller that interfaces with two different types of RAM needs two types of port interface, each dedicated to one RAM interface. A unified abstract representation of a functional block is attempted below:

Functional Blocks: At a highly abstract level, computer science engineering is about designing such functional blocks, integrating them into coherent wholes, or both. An electronic product can therefore be perceived as an organization of abstract functional modules that communicate via shared interface rules and perform complex use cases valuable to the end user. Higher levels of human cognition recognize objects only in terms of their abstract qualities, not by remembering their numerous gritty details. A process intended for engineering a complex system must therefore harness specialized, detailed knowledge that is dispersed across many individuals and the functional modules they implement.

For instance, an application writer cannot be expected to comprehend how the file system represents data on the hard disk, and similarly a middleware engineer can afford to be ignorant of device driver read/write protocols as long as the driver module behaves according to its documented interface rules. In other words, an integration engineer needs to know only the abstract functionality of the modules and their interface rules in order to arrange them logically into a product. Lowering the knowledge barrier in this way reduces cost and time to market. The challenge in implementing a functional block is largely one of finding the optimal balance: making the module abstract, generic, and modular while remaining within the bounds of the required performance and code complexity.
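The middleware and driver relationship described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a real driver model: the `BlockDriver` interface, the two driver classes, and the `store` and `load` helpers are all hypothetical names invented here. The middleware functions rely only on the documented interface, while the two drivers differ in how they handle a write internally.

```python
from typing import Protocol


class BlockDriver(Protocol):
    """Documented interface rules: fixed-size blocks addressed by index."""

    block_size: int

    def read_block(self, index: int) -> bytes: ...
    def write_block(self, index: int, data: bytes) -> None: ...


class RamDiskDriver:
    """Hypothetical driver that keeps blocks in a plain list."""

    def __init__(self, blocks: int, block_size: int = 4):
        self.block_size = block_size
        self._blocks = [bytes(block_size)] * blocks

    def read_block(self, index: int) -> bytes:
        return self._blocks[index]

    def write_block(self, index: int, data: bytes) -> None:
        self._blocks[index] = data.ljust(self.block_size, b"\0")


class FlashLikeDriver:
    """Hypothetical driver that erases a block before programming it."""

    def __init__(self, blocks: int, block_size: int = 4):
        self.block_size = block_size
        self._cells = bytearray(b"\xff" * blocks * block_size)
        self.erase_count = 0

    def read_block(self, index: int) -> bytes:
        start = index * self.block_size
        return bytes(self._cells[start:start + self.block_size])

    def write_block(self, index: int, data: bytes) -> None:
        start = index * self.block_size
        # Internal protocol detail, invisible to the middleware: erase first.
        self._cells[start:start + self.block_size] = b"\xff" * self.block_size
        self.erase_count += 1
        padded = data.ljust(self.block_size, b"\0")
        self._cells[start:start + self.block_size] = padded


def store(driver: BlockDriver, payload: bytes) -> int:
    """Middleware: split a payload into blocks; returns blocks written."""
    size = driver.block_size
    chunks = [payload[i:i + size] for i in range(0, len(payload), size)]
    for index, chunk in enumerate(chunks):
        driver.write_block(index, chunk)
    return len(chunks)


def load(driver: BlockDriver, blocks: int, length: int) -> bytes:
    """Middleware: read the blocks back and trim the padding."""
    data = b"".join(driver.read_block(i) for i in range(blocks))
    return data[:length]


payload = b"hello world"
results = {}
for driver in (RamDiskDriver(blocks=8), FlashLikeDriver(blocks=8)):
    written = store(driver, payload)
    results[type(driver).__name__] = load(driver, written, len(payload))
```

Both drivers return the original payload through the same `store` and `load` calls, even though only one of them performs an erase cycle per write. Keeping the interface this narrow is a design choice: it lowers the knowledge barrier for the integrator, at the cost of hiding details (such as erase counts) that a performance-sensitive caller might want.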

The open-source framework accentuates certain advantages of the modular construction described above. Proprietary systems evolve only among a known set of individuals who coordinate their expertise through institutions within a closed consortium, whereas open source leverages a larger extended order that enables the use of expertise possessed by both known and unknown individuals. From the development perspective, it harnesses the expertise of a larger group and, at the same time, coordinates their combined activities far better toward productive use.

Market economics is about finding the most productive employment of time and resources; in our case, that means discovering all the possible uses of an abstract functional module. The knowledge accessible within the open-source market accelerates this discovery process, and it also leverages the modular construction to coordinate dispersed expertise. In other words, depending on their individual expertise, anyone can integrate new modules or improve and tailor existing ones. For instance, a generic Linux kernel driver module might end up in a server, a TV, or a cell phone, depending on how it is combined with other hardware-specific modules. This framework thus furthers the discovery and productive employment of already existing resources.

The Venn diagrams above illustrate how the nature of an order can influence the development and arrangement of functional modules. With improved means of coordinating dispersed expertise, more creative and productive combinations will emerge. Indeed, what we are attempting to solve is a kind of Hayekian knowledge problem!